Neuromorphic Chips: Computing That Mimics the Brain

Neuromorphic chips are specialized processors designed to mimic the neural structure and function of the human brain, and they are starting to change how machines process information in areas ranging from edge computing to advanced pattern recognition. Unlike conventional processors, which are built around clocked, instruction-by-instruction execution, neuromorphic chips use massively parallel, event-driven pathways that promise much better efficiency on specific tasks while consuming significantly less power. As researchers continue to refine this technology, we're witnessing the early stages of what could fundamentally transform our relationship with computers.

What exactly are neuromorphic chips?

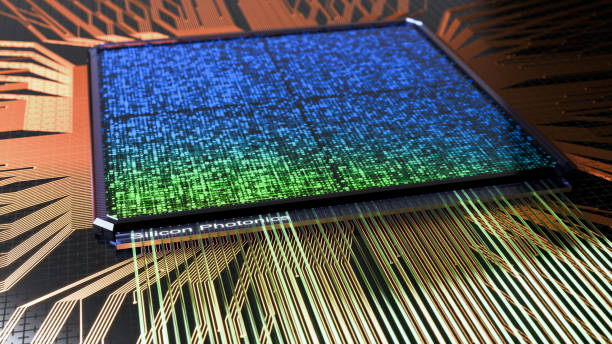

Neuromorphic engineering represents a radical departure from traditional von Neumann computing architectures that have dominated the technology landscape for decades. While conventional processors separate memory and computing functions—creating bottlenecks when moving data between these components—neuromorphic chips integrate memory and processing in structures that resemble neurons and synapses. This architectural difference isn’t merely academic; it enables these chips to perform certain computations with remarkable efficiency, particularly tasks involving pattern recognition, sensory processing, and adaptive learning.

The fundamental building blocks of neuromorphic chips are artificial neurons and synapses implemented in hardware. These components communicate through discrete spikes, or pulses, rather than continuous signals, mirroring how biological brains process information. Networks built this way are known as spiking neural networks (SNNs), and they allow for event-driven computing in which energy is consumed primarily when information changes. Conventional clocked systems, by contrast, keep drawing power on every cycle even when their inputs are static.
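To make the spiking idea concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is not the circuit used by any particular chip, and the function name and the `threshold`, `leak`, and `reset` values are illustrative assumptions.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns the list of time steps at which the neuron emitted a spike.
    All parameter values are illustrative assumptions, not chip specifications.
    """
    potential = 0.0
    spike_times = []
    for t, current in enumerate(input_current):
        # Leak part of the stored potential, then integrate the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spike_times.append(t)  # emit a spike (an "event")
            potential = reset      # return to the resting value after firing
    return spike_times


quiet_input = [0.0] * 10  # nothing changes: no spikes, so no downstream work
busy_input = [0.6] * 10   # steady drive: the neuron fires periodically

print(simulate_lif(quiet_input))  # []
print(simulate_lif(busy_input))   # [1, 3, 5, 7, 9]
```

With no input the neuron produces no events at all, which is the property that event-driven hardware exploits to save energy.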

Companies like Intel, IBM, and BrainChip have developed neuromorphic processors with increasing sophistication. Intel’s Loihi chip, for instance, contains 130,000 neurons and 130 million synapses, while maintaining remarkably low power consumption compared to conventional GPUs performing similar tasks. This efficiency stems directly from the brain-inspired architecture that only activates when needed.

The evolutionary path of brain-inspired computing

Neuromorphic engineering traces its origins to the late 1980s when Carver Mead, a pioneer in VLSI (Very-Large-Scale Integration) systems, first proposed creating silicon systems that emulate neurobiological architectures. However, the field remained largely theoretical until advances in materials science, fabrication techniques, and neural network algorithms converged in the past decade.

Early implementations focused on simple pattern recognition tasks, but modern neuromorphic systems have dramatically expanded their capabilities. The SpiNNaker machine at the University of Manchester, which later became one of the Human Brain Project’s neuromorphic platforms, was designed to simulate up to a billion neurons in real time. Meanwhile, IBM’s TrueNorth architecture represented a significant milestone with its million-neuron chip that consumed roughly 70 milliwatts during operation.

The evolution has accelerated dramatically since 2018, with neuromorphic systems moving from research curiosities to deployable technology solutions. BrainChip’s Akida processor became commercially available in 2021, marking an important transition point where brain-inspired computing began reaching practical applications beyond research laboratories.

How do these chips transform real-world applications?

The practical applications of neuromorphic computing extend across numerous domains where power efficiency, real-time processing, and adaptability are crucial. One prominent use case is edge computing, where devices must process sensory information locally without relying on cloud connections. Autonomous vehicles, for instance, can use neuromorphic processors to interpret visual data from cameras with minimal power consumption and millisecond response times.

Smart health monitoring provides another compelling application area. Neuromorphic chips can continuously analyze biometric signals like heart rate variability or EEG patterns while consuming very little power, which could enable wearable devices with battery life measured in weeks rather than hours. On the sensing side, companies like Prophesee have developed neuromorphic vision sensors that only capture changes in a visual scene, which can reduce the volume of data to process by orders of magnitude compared to traditional frame-based cameras when most of the scene is static.
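The idea of capturing only changes can be sketched with simple frame differencing. This is a deliberate simplification: real event-based sensors work asynchronously per pixel rather than on whole frames, and the function name, the `threshold` value, and the sample frames below are hypothetical.

```python
def frame_to_events(previous, current, threshold=10):
    """Return (row, col, polarity) events for pixels whose brightness changed.

    A pixel produces an event only if its brightness moved by at least
    `threshold`; polarity is +1 for brighter and -1 for darker.
    """
    events = []
    for r, (prev_row, cur_row) in enumerate(zip(previous, current)):
        for c, (old, new) in enumerate(zip(prev_row, cur_row)):
            diff = new - old
            if abs(diff) >= threshold:
                events.append((r, c, 1 if diff > 0 else -1))
    return events


# Two hypothetical 3x3 grayscale frames; only two pixels differ.
frame_a = [[100, 100, 100],
           [100, 100, 100],
           [100, 100, 100]]
frame_b = [[100, 100, 100],
           [100, 160, 100],
           [100, 100, 40]]

events = frame_to_events(frame_a, frame_b)
print(events)                             # [(1, 1, 1), (2, 2, -1)]
print(frame_to_events(frame_a, frame_a))  # [] (a static scene yields no data)
```

Here nine pixels collapse to two events, and a completely static scene produces nothing to process.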

Industrial automation systems benefit similarly from the event-driven processing model. Manufacturing equipment fitted with neuromorphic sensors can detect anomalies in real-time without streaming continuous data, creating more responsive and energy-efficient systems. Market analysis firm Tractica forecasts that the neuromorphic computing market will reach approximately $4.3 billion by 2026, with industrial applications driving significant adoption.

Technical challenges and limitations

Despite promising advances, neuromorphic engineering faces substantial challenges before widespread adoption becomes feasible. Fabrication complexity represents a primary hurdle, as creating chips with millions of artificial neurons requires specialized manufacturing processes that don’t always align with standard semiconductor production methods. The integration of novel materials like memristors—components whose resistance changes based on the history of current—adds further complexity.
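The history-dependent resistance of a memristor can be illustrated with a toy model in which an internal state accumulates the current that has passed through the device. This is a simplified sketch loosely following the linear-drift idea, not a model of any specific device; the function name and the values of `r_on`, `r_off`, and `k` are assumptions chosen for readability.

```python
def simulate_memristor(currents, dt=1.0, r_on=100.0, r_off=16000.0, k=0.1):
    """Toy memristor: resistance depends on the accumulated current.

    `state` runs from 0.0 (fully "off", high resistance) to 1.0 (fully "on",
    low resistance). All parameter values are illustrative assumptions.
    Returns the resistance in ohms after each time step.
    """
    state = 0.0
    resistances = []
    for current in currents:
        state += k * current * dt          # integrate the current over time
        state = min(1.0, max(0.0, state))  # keep the state within its bounds
        resistances.append(r_on * state + r_off * (1.0 - state))
    return resistances


# Positive current lowers the resistance, zero current leaves it unchanged
# (the device "remembers"), and negative current raises it again.
trace = simulate_memristor([1, 1, 1, 0, 0, -1])
for step, resistance in enumerate(trace):
    print(step, round(resistance))
```

The steps with zero current are the interesting ones: the resistance holds its value without any input, which is why such devices are attractive as hardware synapses that store a weight.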

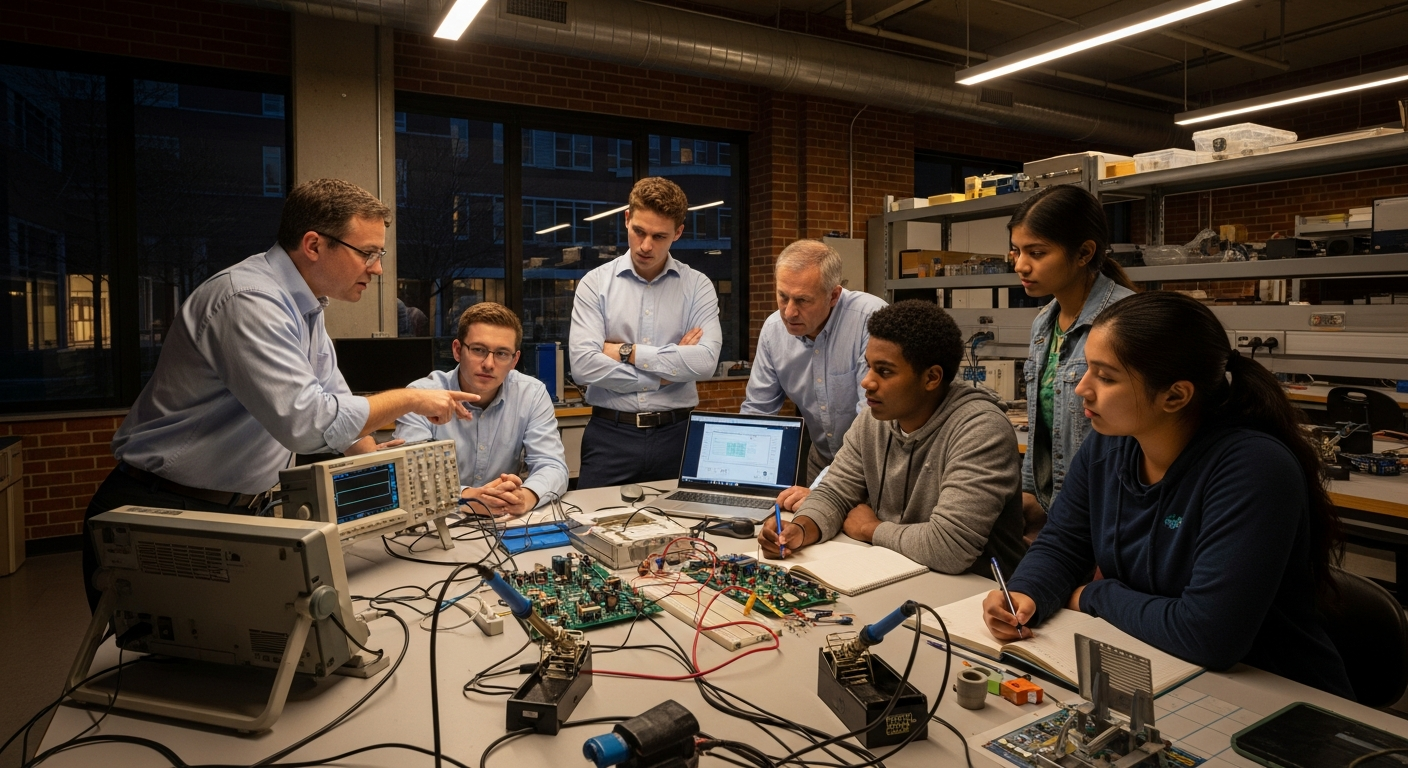

Programming paradigms present another significant obstacle. Software engineers trained in conventional computing approaches must adapt to fundamentally different concepts when developing for neuromorphic systems. Unlike traditional programming that follows explicit instructions, neuromorphic computing often involves training systems through exposure to data patterns, making development more akin to teaching than programming.

Scalability concerns persist as researchers work to increase neuron counts while maintaining energy efficiency advantages. Current neuromorphic chips still fall far short of the human brain’s approximately 86 billion neurons and its synapses, which are commonly estimated to number around 100 trillion. Achieving comparable scale while preserving the architecture’s inherent advantages requires solving complex problems in materials science, circuit design, and thermal management.
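A quick back-of-the-envelope calculation shows the size of that gap, using the Loihi figures quoted earlier in this article. It counts neurons only and ignores connectivity, chip-to-chip communication, and every other practical constraint, so treat the result as an order-of-magnitude illustration rather than a design estimate.

```python
LOIHI_NEURONS = 130_000          # per chip, as quoted in this article
LOIHI_SYNAPSES = 130_000_000     # per chip, as quoted in this article
BRAIN_NEURONS = 86_000_000_000   # approximate human brain neuron count

# Average number of synapses available per neuron on one chip.
synapses_per_neuron = LOIHI_SYNAPSES // LOIHI_NEURONS

# Chips needed to match the brain's neuron count (ceiling division).
chips_needed = -(-BRAIN_NEURONS // LOIHI_NEURONS)

print(synapses_per_neuron)  # 1000
print(chips_needed)         # 661539
```

Matching the neuron count alone would take well over half a million such chips.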

The future landscape: competition and convergence

The competitive landscape for neuromorphic technology features both established semiconductor giants and specialized startups. Intel continues developing its Loihi platform, now in its second generation, while IBM has followed its TrueNorth architecture with newer brain-inspired designs such as NorthPole. Meanwhile, startups like BrainChip have focused on commercializing neuromorphic solutions for specific application domains, particularly edge AI deployments.

Industry analysts expect the market to follow a fragmented development path, with specialized neuromorphic processors handling specific workloads rather than replacing general-purpose computing entirely. This suggests a future computing ecosystem where traditional processors, GPUs, and neuromorphic chips coexist, each handling the tasks for which they’re best suited.

Looking forward, the convergence of neuromorphic computing with emerging memory technologies like phase-change memory and resistive RAM promises to further enhance these systems’ capabilities. Research at institutions like ETH Zurich and MIT indicates that future neuromorphic chips may achieve energy efficiencies near one femtojoule per synaptic operation, which would bring them close to the efficiency of biological brains.
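To get a feel for what one femtojoule per synaptic operation would mean, the sketch below converts an energy-per-operation figure into power for a given event rate. The workload of 10^14 synaptic operations per second and the 1 picojoule comparison point are hypothetical round numbers chosen for illustration, not measurements of any system.

```python
FEMTOJOULES_PER_JOULE = 10**15


def synaptic_power_watts(ops_per_second, energy_per_op_fj):
    """Power in watts for a given synaptic event rate and energy per event."""
    return ops_per_second * energy_per_op_fj / FEMTOJOULES_PER_JOULE


# Hypothetical workload: 10^14 synaptic operations per second.
ops_per_second = 10**14

print(synaptic_power_watts(ops_per_second, 1))     # 0.1   (at 1 fJ per operation)
print(synaptic_power_watts(ops_per_second, 1000))  # 100.0 (at 1 pJ per operation)
```

Under these assumptions, a thousandfold difference in energy per operation separates a device that runs on a tenth of a watt from one that needs a hundred watts for the same workload.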

As this technology matures, it represents not just an incremental improvement in computing but potentially an entirely new paradigm. It could help address the growing energy demands of our digital infrastructure while enabling new classes of intelligent, responsive devices that interact with the world in fundamentally different ways.